Assessment in education is changing as AI tools such as ChatGPT and other language-model bots move rapidly into everyday life. Many bots are open access, easy to use and becoming integrated into everyday tools. Think what that means for a moment: students have access to software that can help them create essays, blogs, video transcripts and reflections, suggest workflows and summarise peer-reviewed publications. When prompts are constructed well, the outputs can give the user targeted and relevant (although not always accurate) information. At the time of writing, the outputs of such bots are comparable to first-year undergraduate essays and good online exam answers.

The world of assessment has changed, so here are some practical approaches for using AI in written assessments, rather than fighting against it.

Embrace or displace?

Proponents of AI argue that it can improve efficiency, productivity and access to information. However, others worry that AI could lead to a loss of academic standards and greater inequality and bias in information sources. Outputs are only as good as the database they draw from. Detecting the use of AI in written assessments is possible: the written style can be noticeable and the outputs for the same prompt are similar. There’s even an AI to detect the use of AI. But do we really want to do that? What’s the benefit to the student of punitive action against the use of a tool that’s fast becoming deeply embedded in search engines and word processors, and could become a critical employability skill?

Is the process or the product more important in learning?

Consider how many of the undergraduate essays you wrote you still refer to. Now consider how often you use the skills you gained as an undergraduate: how to write and how to critically assess information. It was the act of creation that was important, not the product.

Spell checkers and Grammarly have already made the creation of error-free text an automated process. Online packages will perform basic statistics in an instant and give you the text to put in your figure legend. Citation managers such as RefWorks and EndNote will curate and produce your bibliography in whatever citation style you need. However, these tools all follow procedural rules and produce defined, prescriptive outputs. There is little critical thinking in the act of creation. Higher-level thinking is then centred on how that product is used, the references picked and the interpretation of the data. The same is true for language models: the outputs are only as good as the input prompts used to generate them, and how they are used becomes key. In terms of AI and written assessments, we are interested in the process and the act of creation rather than the product.

Assessment of the process

Assessing the process of writing over the final product has many advantages, as the way a tool has been used becomes part of the output. There are also benefits for academic integrity in having an assessment that builds towards the final article, where you can track who created it and how. This approach can be used in an individual literature review assessment where each student is given a topic that interests them and is directly linked to their final research project. There are two learning objectives with this assessment:

- To engage with literature relevant to the future research project.

- To develop critical thinking skills required for the creation of a review article.

The assessment has a 3,000-word limit, with 2,000 of those words given to the process. Students complete a template detailing the search criteria they’ve used, the databases accessed and the prompts inputted into bots such as ChatGPT. They’re then required to critically evaluate the information gathered from the databases against inclusion and exclusion criteria, and detail how that information will be used in the final written product.

| Template section | Guidance |
| --- | --- |
| Which AI did you use? | Include a copy of your prompts here |
| Paste the original output(s) here | |
| Comment on the quality, depth and rigour of the output(s) | Consider the information (is it correct?); bias in the information (is there an alternative viewpoint?); omissions (is all the information present?) |
| Detail how you have fact-checked the output, including your peer-reviewed sources | |
| How have you used these outputs to help write your literature review? | |
| Justify your contribution to the final text | |

Assessment of the knowledge

Our role as educators is to open the door to the language and thinking needed to generate sensible questions. The information you gain from an AI is only as good as the prompts. If you ask a silly question, you get a silly answer. Asking the right questions is an invaluable professional and personal life skill. Rather than assessing the product of the AI, we should train students in prompt engineering so they can probe a given topic more deeply. In the early stages of a degree, students could use this approach, with well-crafted prompts, to generate expanded notes around the learning materials. Further assessments or tutorials are then based on critiquing the outputs and documenting how this was done.

Top and tail with AI

Language models can assist in writing an essay by providing suggestions for structure, content and language. This removes that dreaded blank page and gives a framework to edit and expand. They can:

- Provide a suggested outline, including an introduction, body paragraphs and conclusion.

- Help develop statements and the main ideas for each body paragraph.

- Give suggestions for supporting evidence and examples to include.

- Make suggestions for grammar, punctuation and clarity.

It’s important to note that tools such as ChatGPT are language models, so their suggestions should be reviewed and edited, and iterative prompts used to refine the structure. This is where the skill of the individual comes in, through additional information, framing and personal observation. At later stages, AI can be used to polish the copy, removing redundant words and suggesting improvements for overall clarity. Final ownership, however, should come from the individual to ensure that it is their voice, experience and understanding that is being represented in the text.

Assessment of skills development

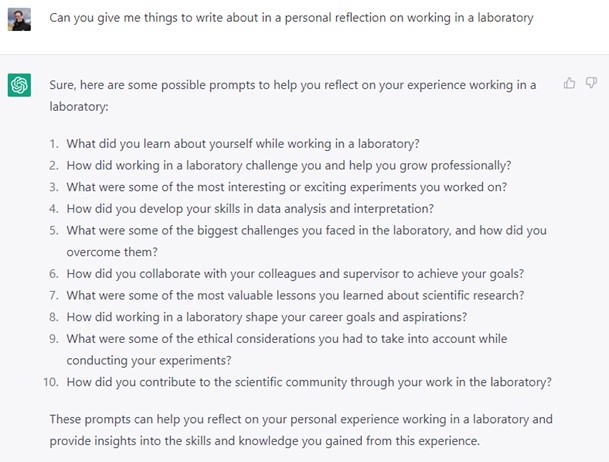

Portfolios are a cornerstone of skills development. They are based on the collection of artefacts and reflections on the experience. AI tools can attempt to write a reflection against a given prompt, but the output is generic and lacks critical feedforward and actionable elements. However, the portfolio itself was never the valuable item; the act of reflection and action planning was. AI tools can suggest areas to reflect on, which students often struggle with, and help structure the process. The assessment then is in the collection of artefacts, the conceptualisation of individual experience and the personalised application.

Final thoughts

The introduction of generative AI tools in education has sparked a much-needed conversation on assessment practices. We mustn’t regress to using outdated and impersonal methods such as traditional in-person exams, which have been shown not only to widen the achievement gap but also to be inadequate for evaluating the skills required for today’s workplace. Instead, it is essential that we innovate, adapt and evolve our approach to assessments.

Thanks to this post’s co-authors – ChatGPT and Mel Green – for their input and editing.